Human Activity Recognition using Machine Learning Approach

DOI:

https://doi.org/10.18196/jrc.25113

Keywords:

KNN, Human Activity Recognition, SVM, RHA.

Abstract

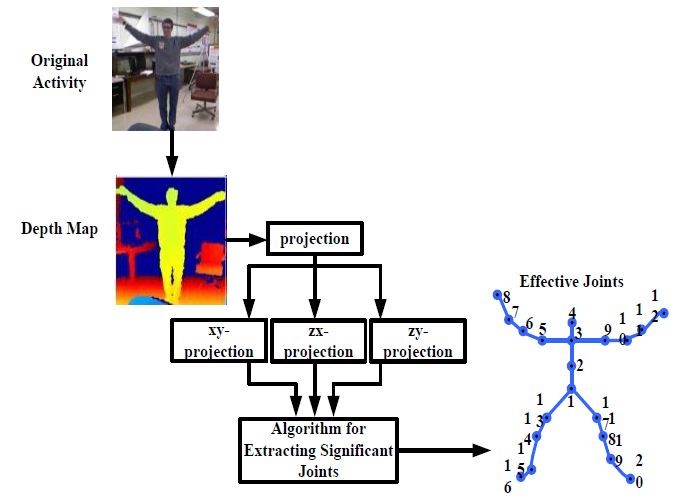

Advances in sensing technology have made it possible to use human activity either as a means of remotely controlling a device or as a basis for sophisticated analysis of human behaviour. Using the skeleton extracted from the input image of a human action, the proposed system implements a simple but novel process that recognizes only the significant joints. It offers a cost-effective approach to human activity recognition that identifies these joints efficiently. A template for an activity recognition system is also provided, in which recognition reliability and system quality are kept in good balance. The research presents a condensed method for extracting spatial and temporal features from event feeds; these features are then passed to a machine learning stage to improve recognition performance. The significance of the study is reflected in the results, which show higher accuracy when the system is trained using KNN. The proposed system used 10-15% of memory while processing 532 MB of digitized real-time event information, with a processing time of 0.5341 seconds. In practical terms, therefore, the supportability of the proposed system is higher. The outcomes are the same for real-time captures of object flexibility and for static frames.
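The pipeline summarized in the abstract (selected skeleton joints, then spatial and temporal features, then a KNN classifier) can be illustrated with a minimal sketch. This is not the authors' implementation: the feature definitions (mean joint position as the spatial part, mean frame-to-frame displacement as the temporal part), the helper names `extract_features`, `knn_predict` and `make_clip`, the toy two-joint skeleton, and the activity labels are all assumptions chosen only to make the idea concrete.

```python
import math
from collections import Counter

# Assumed data layout: a frame is a list of (x, y) joint coordinates,
# and a clip is a list of frames.

def extract_features(clip, joints):
    """Build a feature vector from the selected ("significant") joints.

    Spatial part: mean (x, y) position of each joint over the clip.
    Temporal part: mean frame-to-frame displacement of each joint.
    """
    n = len(clip)
    feats = []
    for j in joints:
        xs = [frame[j][0] for frame in clip]
        ys = [frame[j][1] for frame in clip]
        feats.append(sum(xs) / n)
        feats.append(sum(ys) / n)
        steps = [math.hypot(xs[t + 1] - xs[t], ys[t + 1] - ys[t])
                 for t in range(n - 1)]
        feats.append(sum(steps) / len(steps) if steps else 0.0)
    return feats

def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k training vectors closest to the query."""
    nearest = sorted((math.dist(x, query), label)
                     for x, label in zip(train_x, train_y))
    votes = Counter(label for _, label in nearest[:k])
    return votes.most_common(1)[0][0]

def make_clip(speed, frames=5):
    """Hypothetical toy clip: joint 0 is fixed, joint 1 moves along x."""
    return [[(0.0, 0.0), (speed * t, 1.0)] for t in range(frames)]

# Toy training set: a moving joint is labelled "wave", a still one "stand".
significant_joints = [1]
train_clips = [make_clip(s) for s in (1.0, 1.2, 0.9, 0.0, 0.05, 0.1)]
train_y = ["wave", "wave", "wave", "stand", "stand", "stand"]
train_x = [extract_features(c, significant_joints) for c in train_clips]

query = extract_features(make_clip(1.1), significant_joints)
print(knn_predict(train_x, train_y, query))  # wave
```

Restricting the feature vector to a few joints keeps it short, which is one plausible reading of how the system keeps memory use and processing time low; the real joint-selection procedure is not described in this abstract.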


License

Authors who publish with this journal agree to the following terms:

- Authors retain copyright and grant the journal right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this journal.

- Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the journal's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this journal.

- Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See The Effect of Open Access).

This journal is based on the work at https://journal.umy.ac.id/index.php/jrc and is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. You are free to:

- Share – copy and redistribute the material in any medium or format.

- Adapt – remix, transform, and build upon the material for any purpose, even commercially.

The licensor cannot revoke these freedoms as long as you follow the license terms, which include the following:

- Attribution. You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

- ShareAlike. If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

- No additional restrictions. You may not apply legal terms or technological measures that legally restrict others from doing anything the license permits.

JRC is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License (CC BY-SA).